Tutorial: Remotely tracing an embedded Linux system

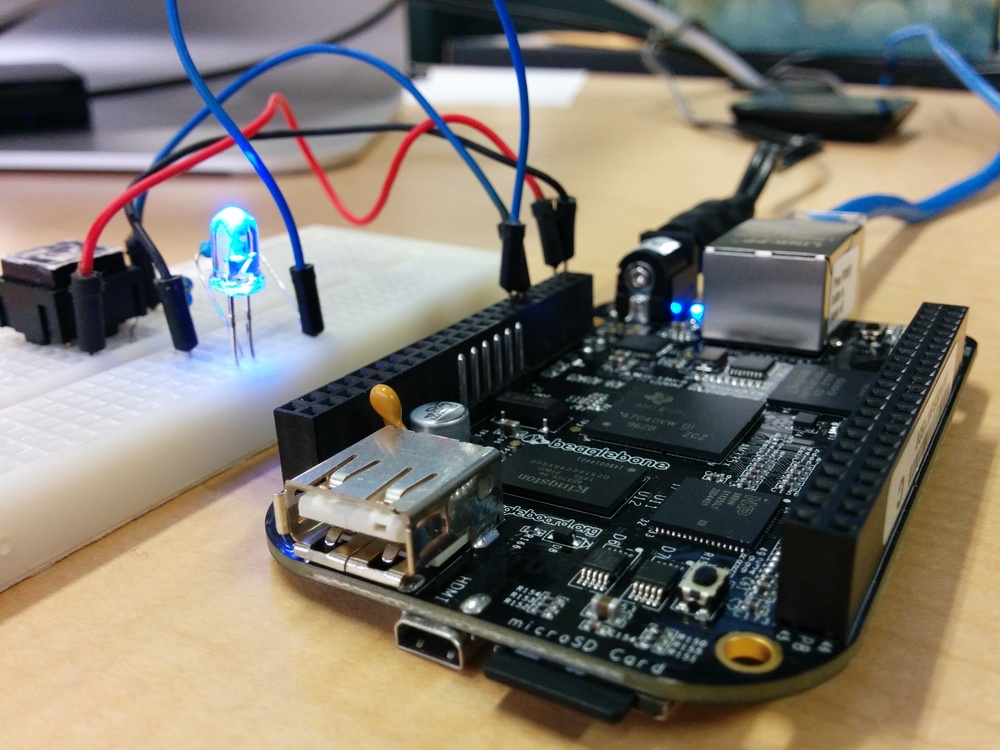

BeagleBone Black with GPIO test circuit.

Embedded systems usually come with constraints such as limited computing power, a minimal amount of memory, and little or no storage. Debugging an embedded system within those constraints can be a real challenge for developers. Sometimes developers fall back to logging to a file with their beloved printf() to find the issues in their software stack. On a system with little or no storage, however, keeping these log files might not be possible.

The goal of this post is to describe a way to debug an embedded system with tracing and to stream the resulting traces to a remote machine.