The LTTng Documentation

Copyright © 2014-2021 The LTTng Project

This work is licensed under a Creative Commons Attribution 4.0 International License.

Warning:This version of the LTTng Documentation is not maintained anymore, since the corresponding version of LTTng is not the current release, nor the previous release.

Visit the latest LTTng Documentation version.

Welcome!

Welcome to the LTTng Documentation!

The Linux Trace Toolkit: next generation is an open source software toolkit which you can use to simultaneously trace the Linux kernel, user applications, and user libraries.

LTTng consists of:

-

Kernel modules to trace the Linux kernel.

-

Shared libraries to trace user applications written in C or C++.

-

Java packages to trace Java applications which use

java.util.loggingor Apache log4j 1.2. -

A kernel module to trace shell scripts and other user applications without a dedicated instrumentation mechanism.

-

Daemons and a command-line tool,

lttng, to control the LTTng tracers.

Open source documentation

Note:This is an open documentation: its source is available in a public Git repository.

Should you find any error in the content of this text, any grammatical mistake, or any dead link, we would be very grateful if you would file a GitHub issue for it or, even better, contribute a patch to this documentation by creating a pull request.

Target audience

The material of this documentation is appropriate for intermediate to advanced software developers working in a Linux environment and interested in efficient software tracing. LTTng is also worth a try for students interested in the inner mechanics of their systems.

If you do not have a programming background, you may wish to skip everything related to instrumentation, which often requires at least some programming language skills.

Chapter descriptions

What follows is a list of brief descriptions of this documentation’s chapters. The latter are ordered in such a way as to make the reading as linear as possible.

-

Nuts and bolts explains the rudiments of software tracing and the rationale behind the LTTng project.

-

Installing LTTng is divided into sections describing the steps needed to get a working installation of LTTng packages for common Linux distributions and from its source.

-

Getting started is a very concise guide to get started quickly with LTTng kernel and user space tracing. This chapter is recommended if you’re new to LTTng or software tracing in general.

-

Understanding LTTng deals with some core concepts and components of the LTTng suite. Understanding those is important since the next chapter assumes you’re familiar with them.

-

Using LTTng is a complete user guide of the LTTng project. It shows in great details how to instrument user applications and the Linux kernel, how to control tracing sessions using the

lttngcommand line tool and miscellaneous practical use cases. -

Reference contains references of LTTng components, like links to online manpages and various APIs.

We recommend that you read the above chapters in this order, although some of them may be skipped depending on your situation. You may skip Nuts and bolts if you’re familiar with tracing and LTTng. Also, you may jump over Installing LTTng if LTTng is already properly installed on your target system.

Convention

Function names, parameter names, variable names, command names, argument

names, file system paths, file names, and other literal strings are

written using a monospace typeface in this document. An italic

word within such a block is a placeholder, usually described in the

following sentence.

Practical tips and notes are given throughout the document using the following style:

Tip:Read the tips.

Terminal boxes are used to show command lines:

$ #

echo Command line as a regular user echo Command line as a the `root` user

Command lines which you need to execute as a priviledged user start with

the # prompt or with sudo. Other command lines start with the $

prompt.

Acknowledgements

A few people made the online LTTng Documentation possible.

Philippe Proulx wrote most of the content, created the diagrams, and formatted the document. He’s the current maintainer of the LTTng Documentation.

Daniel U. Thibault, from the DRDC, wrote “LTTng: The Linux Trace Toolkit Next Generation — A Comprehensive User’s Guide (version 2.3 edition)” which was used to complete parts of the “Core concepts” and “Components of LTTng” sections and for a few passages here and there.

The entire EfficiOS team made essential reviews of the whole document.

We sincerely thank everyone who helped enhance the quality of this documentation.

What’s new in LTTng 2.6?

Most of the changes of LTTng 2.6 are bug fixes, making the toolchain more stable than ever before. Still, LTTng 2.6 adds some interesting features to the project.

LTTng 2.5 already supported the instrumentation and tracing of

Java applications through java.util.logging

(JUL). LTTng 2.6 goes one step further by supporting

Apache log4j 1.2.

The new log4j domain is selected using the --log4j option in various

commands of the lttng tool.

LTTng-modules has supported system call tracing for a long time, but until now, it was only possible to record either all of them, or none of them. LTTng 2.6 allows the user to record specific system call events, for example:

lttng enable-event --kernel --syscall open,fork,chdir,pipe

Finally, the lttng command line tool is not only able to communicate

with humans as it used to do, but also with machines thanks to its new

machine interface feature.

To learn more about the new features of LTTng 2.6, see the release announcement.

Nuts and bolts

What is LTTng? As its name suggests, the Linux Trace Toolkit: next generation is a modern toolkit for tracing Linux systems and applications. So your first question might rather be: what is tracing?

What is tracing?

As the history of software engineering progressed and led to what we now take for granted—complex, numerous and interdependent software applications running in parallel on sophisticated operating systems like Linux—the authors of such components, or software developers, began feeling a natural urge of having tools to ensure the robustness and good performance of their masterpieces.

One major achievement in this field is, inarguably, the GNU debugger (GDB), which is an essential tool for developers to find and fix bugs. But even the best debugger won’t help make your software run faster, and nowadays, faster software means either more work done by the same hardware, or cheaper hardware for the same work.

A profiler is often the tool of choice to identify performance bottlenecks. Profiling is suitable to identify where performance is lost in a given software; the profiler outputs a profile, a statistical summary of observed events, which you may use to discover which functions took the most time to execute. However, a profiler won’t report why some identified functions are the bottleneck. Bottlenecks might only occur when specific conditions are met, sometimes almost impossible to capture by a statistical profiler, or impossible to reproduce with an application altered by the overhead of an event-based profiler. For a thorough investigation of software performance issues, a history of execution, with the recorded values of chosen variables and context, is essential. This is where tracing comes in handy.

Tracing is a technique used to understand what goes on in a running software system. The software used for tracing is called a tracer, which is conceptually similar to a tape recorder. When recording, specific probes placed in the software source code generate events that are saved on a giant tape: a trace file. Both user applications and the operating system may be traced at the same time, opening the possibility of resolving a wide range of problems that are otherwise extremely challenging.

Tracing is often compared to logging. However, tracers and loggers are two different tools, serving two different purposes. Tracers are designed to record much lower-level events that occur much more frequently than log messages, often in the thousands per second range, with very little execution overhead. Logging is more appropriate for very high-level analysis of less frequent events: user accesses, exceptional conditions (errors and warnings, for example), database transactions, instant messaging communications, and such. More formally, logging is one of several use cases that can be accomplished with tracing.

The list of recorded events inside a trace file may be read manually like a log file for the maximum level of detail, but it is generally much more interesting to perform application-specific analyses to produce reduced statistics and graphs that are useful to resolve a given problem. Trace viewers and analysers are specialized tools designed to do this.

So, in the end, this is what LTTng is: a powerful, open source set of tools to trace the Linux kernel and user applications at the same time. LTTng is composed of several components actively maintained and developed by its community.

Alternatives to LTTng

Excluding proprietary solutions, a few competing software tracers exist for Linux:

-

ftrace is the de facto function tracer of the Linux kernel. Its user interface is a set of special files in sysfs.

-

perf is a performance analyzing tool for Linux which supports hardware performance counters, tracepoints, as well as other counters and types of probes. perf’s controlling utility is the

perfcommand line/curses tool. -

strace is a command line utility which records system calls made by a user process, as well as signal deliveries and changes of process state. strace makes use of ptrace to fulfill its function.

-

SystemTap is a Linux kernel and user space tracer which uses custom user scripts to produce plain text traces. Scripts are converted to the C language, then compiled as Linux kernel modules which are loaded to produce trace data. SystemTap’s primary user interface is the

stapcommand line tool. -

sysdig, like SystemTap, uses scripts to analyze Linux kernel events. Scripts, or chisels in sysdig’s jargon, are written in Lua and executed while the system is being traced, or afterwards. sysdig’s interface is the

sysdigcommand line tool as well as the curses-basedcsysdigtool.

The main distinctive features of LTTng is that it produces correlated kernel and user space traces, as well as doing so with the lowest overhead amongst other solutions. It produces trace files in the CTF format, an optimized file format for production and analyses of multi-gigabyte data. LTTng is the result of close to 10 years of active development by a community of passionate developers. LTTng 2.6 is currently available on some major desktop, server, and embedded Linux distributions.

The main interface for tracing control is a single command line tool

named lttng. The latter can create several tracing sessions,

enable/disable events on the fly, filter them efficiently with custom

user expressions, start/stop tracing, and do much more. Traces can be

recorded on disk or sent over the network, kept totally or partially,

and viewed once tracing becomes inactive or in real-time.

Install LTTng now and start tracing!

Installing LTTng

Not available

Warning:The installation documentation for distributions is not available because this version of the LTTng Documentation is not maintained anymore.

Visit the latest LTTng Documentation version.

LTTng is a set of software components which interact to allow instrumenting the Linux kernel and user applications as well as controlling tracing sessions (starting/stopping tracing, enabling/disabling events, and more). Those components are bundled into the following packages:

- LTTng-tools

-

Libraries and command line interface to control tracing sessions.

- LTTng-modules

-

Linux kernel modules for tracing the kernel.

- LTTng-UST

-

User space tracing library.

Most distributions mark the LTTng-modules and LTTng-UST packages as optional. Note that LTTng-modules is only required if you intend to trace the Linux kernel and LTTng-UST is only required if you intend to trace user space applications.

Getting started with LTTng

This is a small guide to get started quickly with LTTng kernel and user space tracing. For a more thorough understanding of LTTng and intermediate to advanced use cases and, see Understanding LTTng and Using LTTng.

Before reading this guide, make sure LTTng is installed. LTTng-tools is required. Also install LTTng-modules for tracing the Linux kernel and LTTng-UST for tracing your own user space applications. When the traces are finally written and complete, the Viewing and analyzing your traces section of this chapter will help you analyze your tracepoint events to investigate.

Tracing the Linux kernel

Make sure LTTng-tools and LTTng-modules packages are installed.

Since you’re about to trace the Linux kernel itself, let’s look at the

available kernel events using the lttng tool, which has a

Git-like command line structure:

lttng list --kernel

Before tracing, you need to create a session:

sudo lttng create

Tip:You can avoid using sudo in the previous and following commands

if your user is a member of the tracing group.

Let’s now enable some events for this session:

sudo lttng enable-event --kernel sched_switch,sched_process_fork

Or you might want to simply enable all available kernel events (beware that trace files grow rapidly when doing this):

sudo lttng enable-event --kernel --all

Start tracing:

sudo lttng start

By default, traces are saved in

\~/lttng-traces/name-date-time,

where name is the session name.

When you’re done tracing:

sudo lttng stop sudo lttng destroy

Although destroy looks scary here, it doesn’t actually destroy the

written trace files: it only destroys the tracing session.

What’s next? Have a look at Viewing and analyzing your traces to view and analyze the trace you just recorded.

Tracing your own user application

The previous section helped you create a trace out of Linux kernel events. This section steps you through a simple example showing you how to trace a Hello world program written in C.

Make sure the LTTng-tools and LTTng-UST packages are installed.

Tracing is just like having printf() calls at specific locations of

your source code, albeit LTTng is much faster and more flexible than

printf(). In the LTTng realm, tracepoint() is analogous to

printf().

Unlike printf(), though, tracepoint() does not use a format string to

know the types of its arguments: the formats of all tracepoints must be

defined before using them. So before even writing our Hello world program,

we need to define the format of our tracepoint. This is done by creating a

tracepoint provider, which consists of a tracepoint provider header

(.h file) and a tracepoint provider definition (.c file).

The tracepoint provider header contains some boilerplate as well as a

list of tracepoint definitions and other optional definition entries

which we skip for this quickstart. Each tracepoint is defined using the

TRACEPOINT_EVENT() macro. For each tracepoint, you must provide:

-

a provider name, which is the "scope" or namespace of this tracepoint (this usually includes the company and project names)

-

a tracepoint name

-

a list of arguments for the eventual

tracepoint()call, each item being:-

the argument C type

-

the argument name

-

-

a list of fields, which correspond to the actual fields of the recorded events for this tracepoint

Here’s an example of a simple tracepoint provider header with two arguments: an integer and a string:

#undef TRACEPOINT_PROVIDER #define TRACEPOINT_PROVIDER hello_world #undef TRACEPOINT_INCLUDE #define TRACEPOINT_INCLUDE "./hello-tp.h" #if !defined(_HELLO_TP_H) || defined(TRACEPOINT_HEADER_MULTI_READ) #define _HELLO_TP_H #include <lttng/tracepoint.h> TRACEPOINT_EVENT( hello_world, my_first_tracepoint, TP_ARGS( int, my_integer_arg, char*, my_string_arg ), TP_FIELDS( ctf_string(my_string_field, my_string_arg) ctf_integer(int, my_integer_field, my_integer_arg) ) ) #endif /* _HELLO_TP_H */ #include <lttng/tracepoint-event.h>

The exact syntax is well explained in the C application instrumentation guide of the Using LTTng chapter, as well as in lttng-ust(3).

Save the above snippet as hello-tp.h.

Write the tracepoint provider definition as hello-tp.c:

#define TRACEPOINT_CREATE_PROBES #define TRACEPOINT_DEFINE #include "hello-tp.h"

Create the tracepoint provider:

gcc -c -I. hello-tp.c

Now, by including hello-tp.h in your own application, you may use the

tracepoint defined above by properly refering to it when calling

tracepoint():

#include <stdio.h> #include "hello-tp.h" int main(int argc, char *argv[]) { int x; puts("Hello, World!\nPress Enter to continue..."); /* * The following getchar() call is only placed here for the purpose * of this demonstration, for pausing the application in order for * you to have time to list its events. It's not needed otherwise. */ getchar(); /* * A tracepoint() call. Arguments, as defined in hello-tp.h: * * 1st: provider name (always) * 2nd: tracepoint name (always) * 3rd: my_integer_arg (first user-defined argument) * 4th: my_string_arg (second user-defined argument) * * Notice the provider and tracepoint names are NOT strings; * they are in fact parts of variables created by macros in * hello-tp.h. */ tracepoint(hello_world, my_first_tracepoint, 23, "hi there!"); for (x = 0; x < argc; ++x) { tracepoint(hello_world, my_first_tracepoint, x, argv[x]); } puts("Quitting now!"); tracepoint(hello_world, my_first_tracepoint, x * x, "x^2"); return 0; }

Save this as hello.c, next to hello-tp.c.

Notice hello-tp.h, the tracepoint provider header, is included

by hello.c.

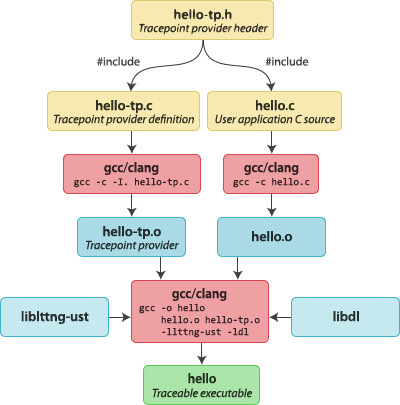

You are now ready to compile the application with LTTng-UST support:

gcc -c hello.c gcc -o hello hello.o hello-tp.o -llttng-ust -ldl

Here’s the whole build process:

If you followed the Tracing the Linux kernel tutorial, the following steps should look familiar.

First, run the application with a few arguments:

./hello world and beyond

You should see

Hello, World! Press Enter to continue...

Use the lttng tool to list all available user space events:

lttng list --userspace

You should see the hello_world:my_first_tracepoint tracepoint listed

under the ./hello process.

Create a tracing session:

lttng create

Enable the hello_world:my_first_tracepoint tracepoint:

lttng enable-event --userspace hello_world:my_first_tracepoint

Start tracing:

lttng start

Go back to the running hello application and press Enter. All tracepoint()

calls are executed and the program finally exits.

Stop tracing:

lttng stop

Done! You may use lttng view to list the recorded events. This command

starts babeltrace

in the background, if it’s installed:

lttng view

should output something like:

[18:10:27.684304496] (+?.?????????) hostname hello_world:my_first_tracepoint: { cpu_id = 0 }, { my_string_field = "hi there!", my_integer_field = 23 }

[18:10:27.684338440] (+0.000033944) hostname hello_world:my_first_tracepoint: { cpu_id = 0 }, { my_string_field = "./hello", my_integer_field = 0 }

[18:10:27.684340692] (+0.000002252) hostname hello_world:my_first_tracepoint: { cpu_id = 0 }, { my_string_field = "world", my_integer_field = 1 }

[18:10:27.684342616] (+0.000001924) hostname hello_world:my_first_tracepoint: { cpu_id = 0 }, { my_string_field = "and", my_integer_field = 2 }

[18:10:27.684343518] (+0.000000902) hostname hello_world:my_first_tracepoint: { cpu_id = 0 }, { my_string_field = "beyond", my_integer_field = 3 }

[18:10:27.684357978] (+0.000014460) hostname hello_world:my_first_tracepoint: { cpu_id = 0 }, { my_string_field = "x^2", my_integer_field = 16 }When you’re done, you may destroy the tracing session, which does not destroy the generated trace files, leaving them available for further analysis:

lttng destroy

The next section presents other alternatives to view and analyze your LTTng traces.

Viewing and analyzing your traces

This section describes how to visualize the data gathered after tracing the Linux kernel or a user space application.

Many ways exist to read LTTng traces:

-

babeltraceis a command line utility which converts trace formats; it supports the format used by LTTng, CTF, as well as a basic text output which may begreped. Thebabeltracecommand is part of the Babeltrace project. -

Babeltrace also includes Python bindings so that you may easily open and read an LTTng trace with your own script, benefiting from the power of Python.

-

Trace Compass is an Eclipse plugin used to visualize and analyze various types of traces, including LTTng’s. It also comes as a standalone application.

LTTng trace files are usually recorded in the ~/lttng-traces directory.

Let’s now view the trace and perform a basic analysis using

babeltrace.

The simplest way to list all the recorded events of a trace is to pass its

path to babeltrace with no options:

babeltrace ~/lttng-traces/my-session

babeltrace finds all traces recursively within the given path and

prints all their events, merging them in order of time.

Listing all the system calls of a Linux kernel trace with their arguments is

easy with babeltrace and grep:

babeltrace ~/lttng-traces/my-kernel-session | grep sys_

Counting events is also straightforward:

babeltrace ~/lttng-traces/my-kernel-session | grep sys_read | wc --lines

The text output of babeltrace is useful for isolating events by simple

matching using grep and similar utilities. However, more elaborate filters

such as keeping only events with a field value falling within a specific range

are not trivial to write using a shell. Moreover, reductions and even the

most basic computations involving multiple events are virtually impossible

to implement.

Fortunately, Babeltrace ships with Python 3 bindings which makes it really easy to read the events of an LTTng trace sequentially and compute the desired information.

Here’s a simple example using the Babeltrace Python bindings. The following script accepts an LTTng Linux kernel trace path as its first argument and prints the short names of the top 5 running processes on CPU 0 during the whole trace:

import sys from collections import Counter import babeltrace def top5proc(): if len(sys.argv) != 2: msg = 'Usage: python {} TRACEPATH'.format(sys.argv[0]) raise ValueError(msg) # a trace collection holds one to many traces col = babeltrace.TraceCollection() # add the trace provided by the user # (LTTng traces always have the 'ctf' format) if col.add_trace(sys.argv[1], 'ctf') is None: raise RuntimeError('Cannot add trace') # this counter dict will hold execution times: # # task command name -> total execution time (ns) exec_times = Counter() # this holds the last `sched_switch` timestamp last_ts = None # iterate events for event in col.events: # keep only `sched_switch` events if event.name != 'sched_switch': continue # keep only events which happened on CPU 0 if event['cpu_id'] != 0: continue # event timestamp cur_ts = event.timestamp if last_ts is None: # we start here last_ts = cur_ts # previous task command (short) name prev_comm = event['prev_comm'] # initialize entry in our dict if not yet done if prev_comm not in exec_times: exec_times[prev_comm] = 0 # compute previous command execution time diff = cur_ts - last_ts # update execution time of this command exec_times[prev_comm] += diff # update last timestamp last_ts = cur_ts # display top 10 for name, ns in exec_times.most_common(5): s = ns / 1000000000 print('{:20}{} s'.format(name, s)) if __name__ == '__main__': top5proc()

Save this script as top5proc.py and run it with Python 3, providing the

path to an LTTng Linux kernel trace as the first argument:

python3 top5proc.py ~/lttng-sessions/my-session-.../kernel

Make sure the path you provide is the directory containing actual trace

files (channel0_0, metadata, and the rest): the babeltrace utility

recurses directories, but the Python bindings do not.

Here’s an example of output:

swapper/0 48.607245889 s chromium 7.192738188 s pavucontrol 0.709894415 s Compositor 0.660867933 s Xorg.bin 0.616753786 s

Note that swapper/0 is the "idle" process of CPU 0 on Linux; since we

weren’t using the CPU that much when tracing, its first position in the list

makes sense.

Understanding LTTng

If you’re going to use LTTng in any serious way, it is fundamental that you become familiar with its core concepts. Technical terms like tracing sessions, domains, channels and events are used over and over in the Using LTTng chapter, and it is assumed that you understand what they mean when reading it.

LTTng, as you already know, is a toolkit. It would be wrong to call it a simple tool since it is composed of multiple interacting components. This chapter also describes the latter, providing details about their respective roles and how they connect together to form the current LTTng ecosystem.

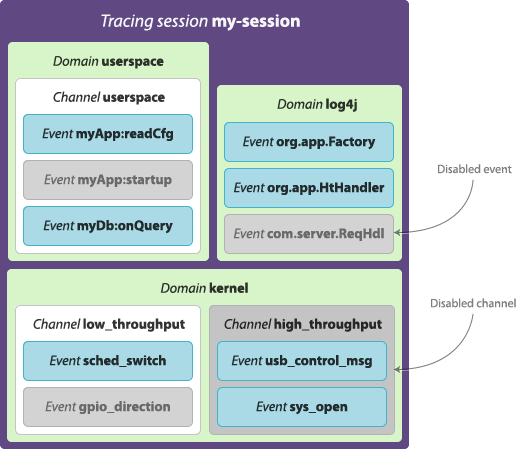

Core concepts

This section explains the various elementary concepts a user has to deal with when using LTTng. They are:

Tracing session

A tracing session is—like any session—a container of state. Anything that is done when tracing using LTTng happens in the scope of a tracing session. In this regard, it is analogous to a bank website’s session: you can’t interact online with your bank account unless you are logged in a session, except for reading a few static webpages (LTTng, too, can report some static information that does not need a created tracing session).

A tracing session holds the following attributes and objects (some of which are described in the following sections):

-

a name

-

the tracing state (tracing started or stopped)

-

the trace data output path/URL (local path or sent over the network)

-

a mode (normal, snapshot or live)

-

the snapshot output paths/URLs (if applicable)

-

for each channel:

-

a name

-

the channel state (enabled or disabled)

-

its parameters (event loss mode, sub-buffers size and count, timer periods, output type, trace files size and count, and the rest)

-

a list of added context information

-

a list of events

-

-

for each event:

-

its state (enabled or disabled)

-

a list of instrumentation points (tracepoints, system calls, dynamic probes, other types of probes)

-

associated log levels

-

a filter expression

-

All this information is completely isolated between tracing sessions. As you can see in the list above, even the tracing state is a per-tracing session attribute, so that you may trace your target system/application in a given tracing session with a specific configuration while another one stays inactive.

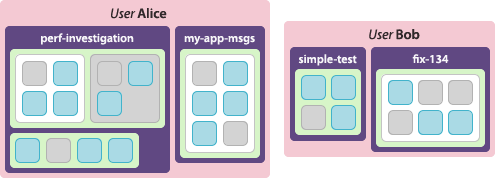

Conceptually, a tracing session is a per-user object; the Plumbing section shows how this is actually implemented. Any user may create as many concurrent tracing sessions as desired.

The trace data generated in a tracing session may be either saved to disk, sent over the network or not saved at all (in which case snapshots may still be saved to disk or sent to a remote machine).

Domain

A tracing domain is the official term the LTTng project uses to designate a tracer category.

There are currently four known domains:

-

Linux kernel

-

user space

-

java.util.logging(JUL) -

log4j

Different tracers expose common features in their own interfaces, but, from a user’s perspective, you still need to target a specific type of tracer to perform some actions. For example, since both kernel and user space tracers support named tracepoints (probes manually inserted in source code), you need to specify which one is concerned when enabling an event because both domains could have existing events with the same name.

Some features are not available in all domains. Filtering enabled events using custom expressions, for example, is currently not supported in the kernel domain, but support could be added in the future.

Channel

A channel is a set of events with specific parameters and potential added context information. Channels have unique names per domain within a tracing session. A given event is always registered to at least one channel; having the same enabled event in two channels makes this event being recorded twice everytime it occurs.

Channels may be individually enabled or disabled. Occurring events of a disabled channel never make it to recorded events.

The fundamental role of a channel is to keep a shared ring buffer, where events are eventually recorded by the tracer and consumed by a consumer daemon. This internal ring buffer is divided into many sub-buffers of equal size.

Channels, when created, may be fine-tuned thanks to a few parameters, many of them related to sub-buffers. The following subsections explain what those parameters are and in which situations you should manually adjust them.

Overwrite and discard event loss modes

As previously mentioned, a channel’s ring buffer is divided into many equally sized sub-buffers.

As events occur, they are serialized as trace data into a specific sub-buffer (yellow arc in the following animation) until it is full: when this happens, the sub-buffer is marked as consumable (red) and another, empty (white) sub-buffer starts receiving the following events. The marked sub-buffer is eventually consumed by a consumer daemon (returns to white).

In an ideal world, sub-buffers are consumed faster than filled, like it is the case above. In the real world, however, all sub-buffers could be full at some point, leaving no space to record the following events. By design, LTTng is a non-blocking tracer: when no empty sub-buffer exists, losing events is acceptable when the alternative would be to cause substantial delays in the instrumented application’s execution. LTTng privileges performance over integrity, aiming at perturbing the traced system as little as possible in order to make tracing of subtle race conditions and rare interrupt cascades possible.

When it comes to losing events because no empty sub-buffer is available, the channel’s event loss mode determines what to do amongst:

- Discard

-

Drop the newest events until a sub-buffer is released.

- Overwrite

-

Clear the sub-buffer containing the oldest recorded events and start recording the newest events there. This mode is sometimes called flight recorder mode because it behaves like a flight recorder: always keep a fixed amount of the latest data.

Which mechanism you should choose depends on your context: prioritize the newest or the oldest events in the ring buffer?

Beware that, in overwrite mode, a whole sub-buffer is abandoned as soon as a new event doesn’t find an empty sub-buffer, whereas in discard mode, only the event that doesn’t fit is discarded.

Also note that a count of lost events is incremented and saved in the trace itself when an event is lost in discard mode, whereas no information is kept when a sub-buffer gets overwritten before being committed.

There are known ways to decrease your probability of losing events. The next section shows how tuning the sub-buffers count and size can be used to virtually stop losing events.

Sub-buffers count and size

For each channel, an LTTng user may set its number of sub-buffers and their size.

Note that there is a noticeable tracer’s CPU overhead introduced when switching sub-buffers (marking a full one as consumable and switching to an empty one for the following events to be recorded). Knowing this, the following list presents a few practical situations along with how to configure sub-buffers for them:

- High event throughput

-

In general, prefer bigger sub-buffers to lower the risk of losing events. Having bigger sub-buffers also ensures a lower sub-buffer switching frequency. The number of sub-buffers is only meaningful if the channel is enabled in overwrite mode: in this case, if a sub-buffer overwrite happens, the other sub-buffers are left unaltered.

- Low event throughput

-

In general, prefer smaller sub-buffers since the risk of losing events is already low. Since events happen less frequently, the sub-buffer switching frequency should remain low and thus the tracer’s overhead should not be a problem.

- Low memory system

-

If your target system has a low memory limit, prefer fewer first, then smaller sub-buffers. Even if the system is limited in memory, you want to keep the sub-buffers as big as possible to avoid a high sub-buffer switching frequency.

You should know that LTTng uses CTF as its trace format, which means event data is very compact. For example, the average LTTng Linux kernel event weights about 32 bytes. A sub-buffer size of 1 MiB is thus considered big.

The previous situations highlight the major trade-off between a few big sub-buffers and more, smaller sub-buffers: sub-buffer switching frequency vs. how much data is lost in overwrite mode. Assuming a constant event throughput and using the overwrite mode, the two following configurations have the same ring buffer total size:

-

2 sub-buffers of 4 MiB each lead to a very low sub-buffer switching frequency, but if a sub-buffer overwrite happens, half of the recorded events so far (4 MiB) are definitely lost.

-

8 sub-buffers of 1 MiB each lead to 4 times the tracer’s overhead as the previous configuration, but if a sub-buffer overwrite happens, only the eighth of events recorded so far are definitely lost.

In discard mode, the sub-buffers count parameter is pointless: use two sub-buffers and set their size according to the requirements of your situation.

Switch timer

The switch timer period is another important configurable feature of channels to ensure periodic sub-buffer flushing.

When the switch timer fires, a sub-buffer switch happens. This timer may be used to ensure that event data is consumed and committed to trace files periodically in case of a low event throughput:

It’s also convenient when big sub-buffers are used to cope with sporadic high event throughput, even if the throughput is normally lower.

Buffering schemes

In the user space tracing domain, two buffering schemes are available when creating a channel:

- Per-PID buffering

-

Keep one ring buffer per process.

- Per-UID buffering

-

Keep one ring buffer for all processes of a single user.

The per-PID buffering scheme consumes more memory than the per-UID option if more than one process is instrumented for LTTng-UST. However, per-PID buffering ensures that one process having a high event throughput won’t fill all the shared sub-buffers, only its own.

The Linux kernel tracing domain only has one available buffering scheme which is to use a single ring buffer for the whole system.

Event

An event, in LTTng’s realm, is a term often used metonymically, having multiple definitions depending on the context:

-

When tracing, an event is a point in space-time. Space, in a tracing context, is the set of all executable positions of a compiled application by a logical processor. When a program is executed by a processor and some instrumentation point, or probe, is encountered, an event occurs. This event is accompanied by some contextual payload (values of specific variables at this point of execution) which may or may not be recorded.

-

In the context of a recorded trace file, the term event implies a recorded event.

-

When configuring a tracing session, enabled events refer to specific rules which could lead to the transfer of actual occurring events (1) to recorded events (2).

The whole Core concepts section focuses on the third definition. An event is always registered to one or more channels and may be enabled or disabled at will per channel. A disabled event never leads to a recorded event, even if its channel is enabled.

An event (3) is enabled with a few conditions that must all be met when an event (1) happens in order to generate a recorded event (2):

-

A probe or group of probes in the traced application must be executed.

-

Optionally, the probe must have a log level matching a log level range specified when enabling the event.

-

Optionally, the occurring event must satisfy a custom expression, or filter, specified when enabling the event.

Plumbing

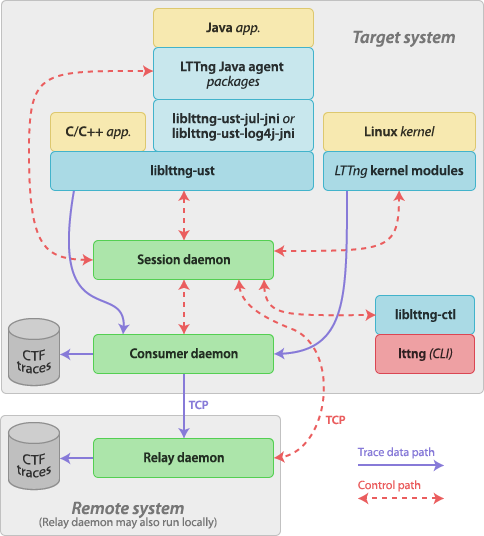

The previous section described the concepts at the heart of LTTng. This section summarizes LTTng’s implementation: how those objects are managed by different applications and libraries working together to form the toolkit.

Overview

As mentioned previously, the whole LTTng suite is made of the LTTng-tools, LTTng-UST, and LTTng-modules packages. Together, they provide different daemons, libraries, kernel modules and command line interfaces. The following tree shows which usable component belongs to which package:

-

LTTng-tools:

-

session daemon (

lttng-sessiond) -

consumer daemon (

lttng-consumerd) -

relay daemon (

lttng-relayd) -

tracing control library (

liblttng-ctl) -

tracing control command line tool (

lttng)

-

-

LTTng-UST:

-

user space tracing library (

liblttng-ust) and its headers -

preloadable user space tracing helpers (

liblttng-ust-libc-wrapper,liblttng-ust-pthread-wrapper,liblttng-ust-cyg-profile,liblttng-ust-cyg-profile-fastandliblttng-ust-dl) -

user space tracepoint code generator command line tool (

lttng-gen-tp) -

java.util.logging/log4j tracepoint providers (liblttng-ust-jul-jniandliblttng-ust-log4j-jni) and JAR file (liblttng-ust-agent.jar)

-

-

LTTng-modules:

-

LTTng Linux kernel tracer module

-

tracing ring buffer kernel modules

-

many LTTng probe kernel modules

-

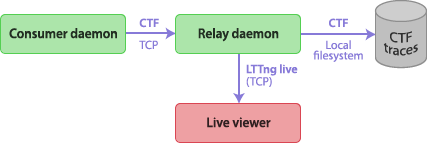

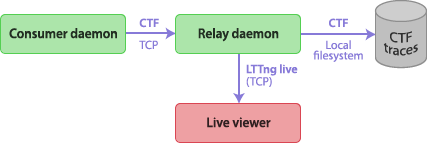

The following diagram shows how the most important LTTng components interact. Plain purple arrows represent trace data paths while dashed red arrows indicate control communications. The LTTng relay daemon is shown running on a remote system, although it could as well run on the target (monitored) system.

Each component is described in the following subsections.

Session daemon

At the heart of LTTng’s plumbing is the session daemon, often called

by its command name, lttng-sessiond.

The session daemon is responsible for managing tracing sessions and what they logically contain (channel properties, enabled/disabled events, and the rest). By communicating locally with instrumented applications (using LTTng-UST) and with the LTTng Linux kernel modules (LTTng-modules), it oversees all tracing activities.

One of the many things that lttng-sessiond does is to keep

track of the available event types. User space applications and

libraries actively connect and register to the session daemon when they

start. By contrast, lttng-sessiond seeks out and loads the appropriate

LTTng kernel modules as part of its own initialization. Kernel event

types are pulled by lttng-sessiond, whereas user space event types

are pushed to it by the various user space tracepoint providers.

Using a specific inter-process communication protocol with Linux kernel and user space tracers, the session daemon can send channel information so that they are initialized, enable/disable specific probes based on enabled/disabled events by the user, send event filters information to LTTng tracers so that filtering actually happens at the tracer site, start/stop tracing a specific application or the Linux kernel, and more.

The session daemon is not useful without some user controlling it,

because it’s only a sophisticated control interchange and thus

doesn’t make any decision on its own. lttng-sessiond opens a local

socket for controlling it, albeit the preferred way to control it is

using liblttng-ctl, an installed C library hiding the communication

protocol behind an easy-to-use API. The lttng tool makes use of

liblttng-ctl to implement a user-friendly command line interface.

lttng-sessiond does not receive any trace data from instrumented

applications; the consumer daemons are the programs responsible for

collecting trace data using shared ring buffers. However, the session

daemon is the one that must spawn a consumer daemon and establish

a control communication with it.

Session daemons run on a per-user basis. Knowing this, multiple

instances of lttng-sessiond may run simultaneously, each belonging

to a different user and each operating independently of the others.

Only root's session daemon, however, may control LTTng kernel modules

(that is, the kernel tracer). With that in mind, if a user has no root

access on the target system, he cannot trace the system’s kernel, but

should still be able to trace its own instrumented applications.

It has to be noted that, although only root's session daemon may

control the kernel tracer, the lttng-sessiond command has a --group

option which may be used to specify the name of a special user group

allowed to communicate with root's session daemon and thus record

kernel traces. By default, this group is named tracing.

If not done yet, the lttng tool, by default, automatically starts a

session daemon. lttng-sessiond may also be started manually:

lttng-sessiond

This starts the session daemon in foreground. Use

lttng-sessiond --daemonize

to start it as a true daemon.

To kill the current user’s session daemon, pkill may be used:

pkill lttng-sessiond

The default SIGTERM signal terminates it cleanly.

Several other options are available and described in

lttng-sessiond(8) or by running lttng-sessiond --help.

Consumer daemon

The consumer daemon, or lttng-consumerd, is a program sharing some

ring buffers with user applications or the LTTng kernel modules to

collect trace data and output it at some place (on disk or sent over

the network to an LTTng relay daemon).

Consumer daemons are created by a session daemon as soon as events are

enabled within a tracing session, well before tracing is activated

for the latter. Entirely managed by session daemons,

consumer daemons survive session destruction to be reused later,

should a new tracing session be created. Consumer daemons are always

owned by the same user as their session daemon. When its owner session

daemon is killed, the consumer daemon also exits. This is because

the consumer daemon is always the child process of a session daemon.

Consumer daemons should never be started manually. For this reason,

they are not installed in one of the usual locations listed in the

PATH environment variable. lttng-sessiond has, however, a

bunch of options (see lttng-sessiond(8)) to

specify custom consumer daemon paths if, for some reason, a consumer

daemon other than the default installed one is needed.

There are up to two running consumer daemons per user, whereas only one

session daemon may run per user. This is because each process has

independent bitness: if the target system runs a mixture of 32-bit and

64-bit processes, it is more efficient to have separate corresponding

32-bit and 64-bit consumer daemons. The root user is an exception: it

may have up to three running consumer daemons: 32-bit and 64-bit

instances for its user space applications and one more reserved for

collecting kernel trace data.

As new tracing domains are added to LTTng, the development community’s

intent is to minimize the need for additionnal consumer daemon instances

dedicated to them. For instance, the java.util.logging (JUL) domain

events are in fact mapped to the user space domain, thus tracing this

particular domain is handled by existing user space domain consumer

daemons.

Relay daemon

When a tracing session is configured to send its trace data over the

network, an LTTng relay daemon must be used at the other end to

receive trace packets and serialize them to trace files. This setup

makes it possible to trace a target system without ever committing trace

data to its local storage, a feature which is useful for embedded

systems, amongst others. The command implementing the relay daemon

is lttng-relayd.

The basic use case of lttng-relayd is to transfer trace data received

over the network to trace files on the local file system. The relay

daemon must listen on two TCP ports to achieve this: one control port,

used by the target session daemon, and one data port, used by the

target consumer daemon. The relay and session daemons agree on common

default ports when custom ones are not specified.

Since the communication transport protocol for both ports is standard TCP, the relay daemon may be started either remotely or locally (on the target system).

While two instances of consumer daemons (32-bit and 64-bit) may run

concurrently for a given user, lttng-relayd needs only be of its

host operating system’s bitness.

The other important feature of LTTng’s relay daemon is the support of LTTng live. LTTng live is an application protocol to view events as they arrive. The relay daemon still records events in trace files, but a tee allows to inspect incoming events.

Using LTTng live locally thus requires to run a local relay daemon.

Control library and command line interface

The LTTng control library, liblttng-ctl, can be used to communicate

with the session daemon using a C API that hides the underlying

protocol’s details. liblttng-ctl is part of LTTng-tools.

liblttng-ctl may be used by including its "master" header:

#include <lttng/lttng.h>

Some objects are referred by name (C string), such as tracing sessions,

but most of them require creating a handle first using

lttng_create_handle(). The best available developer documentation for

liblttng-ctl is, for the moment, its installed header files as such.

Every function/structure is thoroughly documented.

The lttng program is the de facto standard user interface to

control LTTng tracing sessions. lttng uses liblttng-ctl to

communicate with session daemons behind the scenes.

Its man page, lttng(1), is exhaustive, as well as its command

line help (lttng cmd --help, where cmd is the command name).

The Controlling tracing section is a feature

tour of the lttng tool.

User space tracing library

The user space tracing part of LTTng is possible thanks to the user

space tracing library, liblttng-ust, which is part of the LTTng-UST

package.

liblttng-ust provides header files containing macros used to define

tracepoints and create tracepoint providers, as well as a shared object

that must be linked to individual applications to connect to and

communicate with a session daemon and a consumer daemon as soon as the

application starts.

The exact mechanism by which an application is registered to the

session daemon is beyond the scope of this documentation. The only thing

you need to know is that, since the library constructor does this job

automatically, tracepoints may be safely inserted anywhere in the source

code without prior manual initialization of liblttng-ust.

The liblttng-ust-session daemon collaboration also provides an

interesting feature: user space events may be enabled before

applications actually start. By doing this and starting tracing before

launching the instrumented application, you make sure that even the

earliest occurring events can be recorded.

The C application instrumenting guide of the

Using LTTng chapter focuses on using liblttng-ust:

instrumenting, building/linking and running a user application.

LTTng kernel modules

The LTTng Linux kernel modules provide everything needed to trace the Linux kernel: various probes, a ring buffer implementation for a consumer daemon to read trace data and the tracer itself.

Only in exceptional circumstances should you ever need to load the

LTTng kernel modules manually: it is normally the responsability of

root's session daemon to do so. Even if you were to develop your

own LTTng probe module—for tracing a custom kernel or some kernel

module (this topic is covered in the

Linux kernel instrumenting guide of

the Using LTTng chapter)—you

should use the --extra-kmod-probes option of the session daemon to

append your probe to the default list. The session and consumer daemons

of regular users do not interact with the LTTng kernel modules at all.

LTTng kernel modules are installed, by default, in

/usr/lib/modules/release/extra, where release is the

kernel release (see uname --kernel-release).

Using LTTng

Using LTTng involves two main activities: instrumenting and controlling tracing.

Instrumenting is the process of inserting probes into some source code. It can be done manually, by writing tracepoint calls at specific locations in the source code of the program to trace, or more automatically using dynamic probes (address in assembled code, symbol name, function entry/return, and others).

It has to be noted that, as an LTTng user, you may not have to worry about the instrumentation process. Indeed, you may want to trace a program already instrumented. As an example, the Linux kernel is thoroughly instrumented, which is why you can trace it without caring about adding probes.

Controlling tracing is everything

that can be done by the LTTng session daemon, which is controlled using

liblttng-ctl or its command line utility, lttng: creating tracing

sessions, listing tracing sessions and events, enabling/disabling

events, starting/stopping the tracers, taking snapshots, amongst many

other commands.

This chapter is a complete user guide of both activities, with common use cases of LTTng exposed throughout the text. It is assumed that you are familiar with LTTng’s concepts (events, channels, domains, tracing sessions) and that you understand the roles of its components (daemons, libraries, command line tools); if not, we invite you to read the Understanding LTTng chapter before you begin reading this one.

If you’re new to LTTng, we suggest that you rather start with the Getting started small guide first, then come back here to broaden your knowledge.

If you’re only interested in tracing the Linux kernel with its current instrumentation, you may skip the Instrumenting section.

Instrumenting

There are many examples of tracing and monitoring in our everyday life. You have access to real-time and historical weather reports and forecasts thanks to weather stations installed around the country. You know your possibly hospitalized friends' and family’s hearts are safe thanks to electrocardiography. You make sure not to drive your car too fast and have enough fuel to reach your destination thanks to gauges visible on your dashboard.

All the previous examples have something in common: they rely on probes. Without electrodes attached to the surface of a body’s skin, cardiac monitoring would be futile.

LTTng, as a tracer, is no different from the real life examples above. If you’re about to trace a software system or, put in other words, record its history of execution, you better have probes in the subject you’re tracing: the actual software. Various ways were developed to do this. The most straightforward one is to manually place probes, called tracepoints, in the software’s source code. The Linux kernel tracing domain also allows probes added dynamically.

If you’re only interested in tracing the Linux kernel, it may very well be that your tracing needs are already appropriately covered by LTTng’s built-in Linux kernel tracepoints and other probes. Or you may be in possession of a user space application which has already been instrumented. In such cases, the work resides entirely in the design and execution of tracing sessions, allowing you to jump to Controlling tracing right now.

This chapter focuses on the following use cases of instrumentation:

-

Linux kernel module or the kernel itself

Some advanced techniques are also presented at the very end of this chapter.

C application

Instrumenting a C (or C++) application, be it an executable program

or a library, implies using LTTng-UST, the

user space tracing component of LTTng. For C/C++ applications, the

LTTng-UST package includes a dynamically loaded library

(liblttng-ust), C headers and the lttng-gen-tp command line utility.

Since C and C++ are the base languages of virtually all other programming languages (Java virtual machine, Python, Perl, PHP and Node.js interpreters, to name a few), implementing user space tracing for an unsupported language is just a matter of using the LTTng-UST C API at the right places.

The usual work flow to instrument a user space C application with LTTng-UST is:

-

Define tracepoints (actual probes)

-

Write tracepoint providers

-

Insert tracepoints into target source code

-

Package (build) tracepoint providers

-

Build user application and link it with tracepoint providers

The steps above are discussed in greater detail in the following subsections.

Tracepoint provider

Before jumping into defining tracepoints and inserting them into the application source code, you must understand what a tracepoint provider is.

For the sake of this guide, consider the following two files:

tp.h

#undef TRACEPOINT_PROVIDER #define TRACEPOINT_PROVIDER my_provider #undef TRACEPOINT_INCLUDE #define TRACEPOINT_INCLUDE "./tp.h" #if !defined(_TP_H) || defined(TRACEPOINT_HEADER_MULTI_READ) #define _TP_H #include <lttng/tracepoint.h> TRACEPOINT_EVENT( my_provider, my_first_tracepoint, TP_ARGS( int, my_integer_arg, char*, my_string_arg ), TP_FIELDS( ctf_string(my_string_field, my_string_arg) ctf_integer(int, my_integer_field, my_integer_arg) ) ) TRACEPOINT_EVENT( my_provider, my_other_tracepoint, TP_ARGS( int, my_int ), TP_FIELDS( ctf_integer(int, some_field, my_int) ) ) #endif /* _TP_H */ #include <lttng/tracepoint-event.h>

tp.c

#define TRACEPOINT_CREATE_PROBES #include "tp.h"

The two files above are defining a tracepoint provider. A tracepoint

provider is some sort of namespace for tracepoint definitions. Tracepoint

definitions are written above with the TRACEPOINT_EVENT() macro, and allow

eventual tracepoint() calls respecting their definitions to be inserted

into the user application’s C source code (we explore this in a

later section).

Many tracepoint definitions may be part of the same tracepoint provider and many tracepoint providers may coexist in a user space application. A tracepoint provider is packaged either:

-

directly into an existing user application’s C source file

-

as an object file

-

as a static library

-

as a shared library

The two files above, tp.h and tp.c, show a typical template for

writing a tracepoint provider. LTTng-UST was designed so that two

tracepoint providers should not be defined in the same header file.

We will now go through the various parts of the above files and give them a meaning. As you may have noticed, the LTTng-UST API for C/C++ applications is some preprocessor sorcery. The LTTng-UST macros used in your application and those in the LTTng-UST headers are combined to produce actual source code needed to make tracing possible using LTTng.

Let’s start with the header file, tp.h. It begins with

#undef TRACEPOINT_PROVIDER #define TRACEPOINT_PROVIDER my_provider

TRACEPOINT_PROVIDER defines the name of the provider to which the

following tracepoint definitions belong. It is used internally by

LTTng-UST headers and must be defined. Since TRACEPOINT_PROVIDER

could have been defined by another header file also included by the same

C source file, the best practice is to undefine it first.

Note:Names in LTTng-UST follow the C identifier syntax (starting with a letter and containing either letters, numbers or underscores); they are not C strings (not surrounded by double quotes). This is because LTTng-UST macros use those identifier-like strings to create symbols (named types and variables).

The tracepoint provider is a group of tracepoint definitions; its chosen

name should reflect this. A hierarchy like Java packages is recommended,

using underscores instead of dots, for example,

org_company_project_component.

Next is TRACEPOINT_INCLUDE:

#undef TRACEPOINT_INCLUDE #define TRACEPOINT_INCLUDE "./tp.h"

This little bit of instrospection is needed by LTTng-UST to include your header at various predefined places.

Include guard follows:

#if !defined(_TP_H) || defined(TRACEPOINT_HEADER_MULTI_READ) #define _TP_H

Add these precompiler conditionals to ensure the tracepoint event generation can include this file more than once.

The TRACEPOINT_EVENT() macro is defined in a LTTng-UST header file which

must be included:

#include <lttng/tracepoint.h>

This also allows the application to use the tracepoint() macro.

Next is a list of TRACEPOINT_EVENT() macro calls which create the

actual tracepoint definitions. We skip this for the moment and

come back to how to use TRACEPOINT_EVENT()

in a later section. Just pay attention to

the first argument: it’s always the name of the tracepoint provider

being defined in this header file.

End of include guard:

#endif /* _TP_H */

Finally, include <lttng/tracepoint-event.h> to expand the macros:

#include <lttng/tracepoint-event.h>

That’s it for tp.h. Of course, this is only a header file; it must be

included in some C source file to actually use it. This is the job of

tp.c:

#define TRACEPOINT_CREATE_PROBES #include "tp.h"

When TRACEPOINT_CREATE_PROBES is defined, the macros used in tp.h,

which is included just after, actually create the source code for

LTTng-UST probes (global data structures and functions) out of your

tracepoint definitions. How exactly this is done is out of this text’s scope.

TRACEPOINT_CREATE_PROBES is discussed further

in

Building/linking tracepoint providers and the user application.

You could include other header files like tp.h here to create the probes

of different tracepoint providers, for example:

#define TRACEPOINT_CREATE_PROBES #include "tp1.h" #include "tp2.h"

The rule is: probes of a given tracepoint provider

must be created in exactly one source file. This source file could be one

of your project’s; it doesn’t have to be on its own like

tp.c, although

a later section

shows that doing so allows packaging the tracepoint providers

independently and keep them out of your application, also making it

possible to reuse them between projects.

The following sections explain how to define tracepoints, how to use the

tracepoint() macro to instrument your user space C application and how

to build/link tracepoint providers and your application with LTTng-UST

support.

Using lttng-gen-tp

LTTng-UST ships with lttng-gen-tp, a handy command line utility for

generating most of the stuff discussed above. It takes a template file,

with a name usually ending with the .tp extension, containing only

tracepoint definitions, and outputs a tracepoint provider (either a C

source file or a precompiled object file) with its header file.

lttng-gen-tp should suffice in static linking

situations. When using it, write a template file containing a list of

TRACEPOINT_EVENT() macro calls. The tool finds the provider names

used and generate the appropriate files which are going to look a lot

like tp.h and tp.c above.

Just call lttng-gen-tp like this:

lttng-gen-tp my-template.tp

my-template.c, my-template.o and my-template.h

are created in the same directory.

You may specify custom C flags passed to the compiler invoked by

lttng-gen-tp using the CFLAGS environment variable:

CFLAGS=-I/custom/include/path lttng-gen-tp my-template.tp

For more information on lttng-gen-tp, see lttng-gen-tp(1).

Defining tracepoints

As written in Tracepoint provider,

tracepoints are defined using the

TRACEPOINT_EVENT() macro. Each tracepoint, when called using the

tracepoint() macro in the actual application’s source code, generates

a specific event type with its own fields.

Let’s have another look at the example above, with a few added comments:

TRACEPOINT_EVENT( /* tracepoint provider name */ my_provider, /* tracepoint/event name */ my_first_tracepoint, /* list of tracepoint arguments */ TP_ARGS( int, my_integer_arg, char*, my_string_arg ), /* list of fields of eventual event */ TP_FIELDS( ctf_string(my_string_field, my_string_arg) ctf_integer(int, my_integer_field, my_integer_arg) ) )

The tracepoint provider name must match the name of the tracepoint

provider in which this tracepoint is defined

(see Tracepoint provider). In other words,

always use the same string as the value of TRACEPOINT_PROVIDER above.

The tracepoint name becomes the event name once events are recorded by the LTTng-UST tracer. It must follow the tracepoint provider name syntax: start with a letter and contain either letters, numbers or underscores. Two tracepoints under the same provider cannot have the same name. In other words, you cannot overload a tracepoint like you would overload functions and methods in C++/Java.

Note:The concatenation of the tracepoint provider name and the tracepoint name cannot exceed 254 characters. If it does, the instrumented application compiles and runs, but LTTng issues multiple warnings and you could experience serious problems.

The list of tracepoint arguments gives this tracepoint its signature:

see it like the declaration of a C function. The format of TP_ARGS()

arguments is: C type, then argument name; repeat as needed, up to ten

times. For example, if we were to replicate the signature of C standard

library’s fseek(), the TP_ARGS() part would look like:

TP_ARGS( FILE*, stream, long int, offset, int, origin ),

Of course, you need to include appropriate header files before

the TRACEPOINT_EVENT() macro calls if any argument has a complex type.

TP_ARGS() may not be omitted, but may be empty. TP_ARGS(void) is

also accepted.

The list of fields is where the fun really begins. The fields defined in this list are the fields of the events generated by the execution of this tracepoint. Each tracepoint field definition has a C argument expression which is evaluated when the execution reaches the tracepoint. Tracepoint arguments may be used freely in those argument expressions, but they don’t have to.

There are several types of tracepoint fields available. The macros to define them are given and explained in the LTTng-UST library reference section.

Field names must follow the standard C identifier syntax: letter, then optional sequence of letters, numbers or underscores. Each field must have a different name.

Those ctf_*() macros are added to the TP_FIELDS() part of

TRACEPOINT_EVENT(). Note that they are not delimited by commas.

TP_FIELDS() may be empty, but the TP_FIELDS(void) form is not

accepted.

The following snippet shows how argument expressions may be used in tracepoint fields and how they may refer freely to tracepoint arguments.

/* for struct stat */ #include <sys/types.h> #include <sys/stat.h> #include <unistd.h> TRACEPOINT_EVENT( my_provider, my_tracepoint, TP_ARGS( int, my_int_arg, char*, my_str_arg, struct stat*, st ), TP_FIELDS( /* simple integer field with constant value */ ctf_integer( int, /* field C type */ my_constant_field, /* field name */ 23 + 17 /* argument expression */ ) /* my_int_arg tracepoint argument */ ctf_integer( int, my_int_arg_field, my_int_arg ) /* my_int_arg squared */ ctf_integer( int, my_int_arg_field2, my_int_arg * my_int_arg ) /* sum of first 4 characters of my_str_arg */ ctf_integer( int, sum4, my_str_arg[0] + my_str_arg[1] + my_str_arg[2] + my_str_arg[3] ) /* my_str_arg as string field */ ctf_string( my_str_arg_field, /* field name */ my_str_arg /* argument expression */ ) /* st_size member of st tracepoint argument, hexadecimal */ ctf_integer_hex( off_t, /* field C type */ size_field, /* field name */ st->st_size /* argument expression */ ) /* st_size member of st tracepoint argument, as double */ ctf_float( double, /* field C type */ size_dbl_field, /* field name */ (double) st->st_size /* argument expression */ ) /* half of my_str_arg string as text sequence */ ctf_sequence_text( char, /* element C type */ half_my_str_arg_field, /* field name */ my_str_arg, /* argument expression */ size_t, /* length expression C type */ strlen(my_str_arg) / 2 /* length expression */ ) ) )

As you can see, having a custom argument expression for each field makes tracepoints very flexible for tracing a user space C application. This tracepoint definition is reused later in this guide, when actually using tracepoints in a user space application.

Using tracepoint classes

In LTTng-UST, a tracepoint class is a class of tracepoints sharing the same field types and names. A tracepoint instance is one instance of such a declared tracepoint class, with its own event name and tracepoint provider name.

What is documented in Defining tracepoints is actually how to declare a tracepoint class and define a tracepoint instance at the same time. Without revealing the internals of LTTng-UST too much, it has to be noted that one serialization function is created for each tracepoint class. A serialization function is responsible for serializing the fields of a tracepoint into a sub-buffer when tracing. For various performance reasons, when your situation requires multiple tracepoints with different names, but with the same fields layout, the best practice is to manually create a tracepoint class and instantiate as many tracepoint instances as needed. One positive effect of such a design, amongst other advantages, is that all tracepoint instances of the same tracepoint class reuse the same serialization function, thus reducing cache pollution.

As an example, here are three tracepoint definitions as we know them:

TRACEPOINT_EVENT( my_app, get_account, TP_ARGS( int, userid, size_t, len ), TP_FIELDS( ctf_integer(int, userid, userid) ctf_integer(size_t, len, len) ) ) TRACEPOINT_EVENT( my_app, get_settings, TP_ARGS( int, userid, size_t, len ), TP_FIELDS( ctf_integer(int, userid, userid) ctf_integer(size_t, len, len) ) ) TRACEPOINT_EVENT( my_app, get_transaction, TP_ARGS( int, userid, size_t, len ), TP_FIELDS( ctf_integer(int, userid, userid) ctf_integer(size_t, len, len) ) )

In this case, three tracepoint classes are created, with one tracepoint

instance for each of them: get_account, get_settings and

get_transaction. However, they all share the same field names and

types. Declaring one tracepoint class and three tracepoint instances of

the latter is a better design choice:

/* the tracepoint class */ TRACEPOINT_EVENT_CLASS( /* tracepoint provider name */ my_app, /* tracepoint class name */ my_class, /* arguments */ TP_ARGS( int, userid, size_t, len ), /* fields */ TP_FIELDS( ctf_integer(int, userid, userid) ctf_integer(size_t, len, len) ) ) /* the tracepoint instances */ TRACEPOINT_EVENT_INSTANCE( /* tracepoint provider name */ my_app, /* tracepoint class name */ my_class, /* tracepoint/event name */ get_account, /* arguments */ TP_ARGS( int, userid, size_t, len ) ) TRACEPOINT_EVENT_INSTANCE( my_app, my_class, get_settings, TP_ARGS( int, userid, size_t, len ) ) TRACEPOINT_EVENT_INSTANCE( my_app, my_class, get_transaction, TP_ARGS( int, userid, size_t, len ) )

Of course, all those names and TP_ARGS() invocations are redundant,

but some C preprocessor magic can solve this:

#define MY_TRACEPOINT_ARGS \ TP_ARGS( \ int, userid, \ size_t, len \ ) TRACEPOINT_EVENT_CLASS( my_app, my_class, MY_TRACEPOINT_ARGS, TP_FIELDS( ctf_integer(int, userid, userid) ctf_integer(size_t, len, len) ) ) #define MY_APP_TRACEPOINT_INSTANCE(name) \ TRACEPOINT_EVENT_INSTANCE( \ my_app, \ my_class, \ name, \ MY_TRACEPOINT_ARGS \ ) MY_APP_TRACEPOINT_INSTANCE(get_account) MY_APP_TRACEPOINT_INSTANCE(get_settings) MY_APP_TRACEPOINT_INSTANCE(get_transaction)

Assigning log levels to tracepoints

Optionally, a log level can be assigned to a defined tracepoint. Assigning different levels of importance to tracepoints can be useful; when controlling tracing sessions, you can choose to only enable tracepoints falling into a specific log level range.

Log levels are assigned to defined tracepoints using the

TRACEPOINT_LOGLEVEL() macro. The latter must be used after having

used TRACEPOINT_EVENT() for a given tracepoint. The

TRACEPOINT_LOGLEVEL() macro has the following construct:

TRACEPOINT_LOGLEVEL(PROVIDER_NAME, TRACEPOINT_NAME, LOG_LEVEL)

where the first two arguments are the same as the first two arguments

of TRACEPOINT_EVENT() and LOG_LEVEL is one

of the values given in the

LTTng-UST library reference

section.

As an example, let’s assign a TRACE_DEBUG_UNIT log level to our

previous tracepoint definition:

TRACEPOINT_LOGLEVEL(my_provider, my_tracepoint, TRACE_DEBUG_UNIT)

Probing the application’s source code

Once tracepoints are properly defined within a tracepoint provider,

they may be inserted into the user application to be instrumented

using the tracepoint() macro. Its first argument is the tracepoint

provider name and its second is the tracepoint name. The next, optional

arguments are defined by the TP_ARGS() part of the definition of

the tracepoint to use.

As an example, let us again take the following tracepoint definition:

TRACEPOINT_EVENT( /* tracepoint provider name */ my_provider, /* tracepoint/event name */ my_first_tracepoint, /* list of tracepoint arguments */ TP_ARGS( int, my_integer_arg, char*, my_string_arg ), /* list of fields of eventual event */ TP_FIELDS( ctf_string(my_string_field, my_string_arg) ctf_integer(int, my_integer_field, my_integer_arg) ) )

Assuming this is part of a file named tp.h which defines the tracepoint

provider and which is included by tp.c, here’s a complete C application

calling this tracepoint (multiple times):

#define TRACEPOINT_DEFINE #include "tp.h" int main(int argc, char* argv[]) { int i; tracepoint(my_provider, my_first_tracepoint, 23, "Hello, World!"); for (i = 0; i < argc; ++i) { tracepoint(my_provider, my_first_tracepoint, i, argv[i]); } return 0; }

For each tracepoint provider, TRACEPOINT_DEFINE must be defined into

exactly one translation unit (C source file) of the user application,

before including the tracepoint provider header file. In other words,

for a given tracepoint provider, you cannot define TRACEPOINT_DEFINE,

and then include its header file in two separate C source files of

the same application. TRACEPOINT_DEFINE is discussed further in

Building/linking tracepoint providers and the user application.

As another example, remember this definition we wrote in a previous section (comments are stripped):

/* for struct stat */ #include <sys/types.h> #include <sys/stat.h> #include <unistd.h> TRACEPOINT_EVENT( my_provider, my_tracepoint, TP_ARGS( int, my_int_arg, char*, my_str_arg, struct stat*, st ), TP_FIELDS( ctf_integer(int, my_constant_field, 23 + 17) ctf_integer(int, my_int_arg_field, my_int_arg) ctf_integer(int, my_int_arg_field2, my_int_arg * my_int_arg) ctf_integer(int, sum4_field, my_str_arg[0] + my_str_arg[1] + my_str_arg[2] + my_str_arg[3]) ctf_string(my_str_arg_field, my_str_arg) ctf_integer_hex(off_t, size_field, st->st_size) ctf_float(double, size_dbl_field, (double) st->st_size) ctf_sequence_text(char, half_my_str_arg_field, my_str_arg, size_t, strlen(my_str_arg) / 2) ) )

Here’s an example of calling it:

#define TRACEPOINT_DEFINE #include "tp.h" int main(void) { struct stat s; stat("/etc/fstab", &s); tracepoint(my_provider, my_tracepoint, 23, "Hello, World!", &s); return 0; }

When viewing the trace, assuming the file size of /etc/fstab is

301 bytes, the event generated by the execution of this tracepoint

should have the following fields, in this order:

my_constant_field 40 my_int_arg_field 23 my_int_arg_field2 529 sum4_field 389 my_str_arg_field "Hello, World!" size_field 0x12d size_dbl_field 301.0 half_my_str_arg_field "Hello,"

Building/linking tracepoint providers and the user application

The final step of using LTTng-UST for tracing a user space C application (beside running the application) is building and linking tracepoint providers and the application itself.

As discussed above, the macros used by the user-written tracepoint provider

header file are useless until actually used to create probes code

(global data structures and functions) in a translation unit (C source file).

This is accomplished by defining TRACEPOINT_CREATE_PROBES in a translation

unit and then including the tracepoint provider header file.

When TRACEPOINT_CREATE_PROBES is defined, macros used and included by

the tracepoint provider header produce actual source code needed by any

application using the defined tracepoints. Defining

TRACEPOINT_CREATE_PROBES produces code used when registering

tracepoint providers when the tracepoint provider package loads.

The other important definition is TRACEPOINT_DEFINE. This one creates

global, per-tracepoint structures referencing the tracepoint providers

data. Those structures are required by the actual functions inserted

where tracepoint() macros are placed and need to be defined by the

instrumented application.

Both TRACEPOINT_CREATE_PROBES and TRACEPOINT_DEFINE need to be defined

at some places in order to trace a user space C application using LTTng.

Although explaining their exact mechanism is beyond the scope of this

document, the reason they both exist separately is to allow the trace

providers to be packaged as a shared object (dynamically loaded library).

There are two ways to compile and link the tracepoint providers with the application: statically or dynamically. Both methods are covered in the following subsections.

Static linking the tracepoint providers to the application

With the static linking method, compiled tracepoint providers are copied into the target application. There are three ways to do this:

-

Use one of your existing C source files to create probes.

-

Create probes in a separate C source file and build it as an object file to be linked with the application (more decoupled).

-

Create probes in a separate C source file, build it as an object file and archive it to create a static library (more decoupled, more portable).

The first approach is to define TRACEPOINT_CREATE_PROBES and include

your tracepoint provider(s) header file(s) directly into an existing C

source file. Here’s an example:

#include <stdlib.h> #include <stdio.h> /* ... */ #define TRACEPOINT_CREATE_PROBES #define TRACEPOINT_DEFINE #include "tp.h" /* ... */ int my_func(int a, const char* b) { /* ... */ tracepoint(my_provider, my_tracepoint, buf, sz, limit, &tt) /* ... */ } /* ... */

Again, before including a given tracepoint provider header file,

TRACEPOINT_CREATE_PROBES and TRACEPOINT_DEFINE must be defined in

one, and only one, translation unit. Other C source files of the

same application may include tp.h to use tracepoints with

the tracepoint() macro, but must not define

TRACEPOINT_CREATE_PROBES/TRACEPOINT_DEFINE again.

This translation unit may be built as an object file by making sure to

add . to the include path:

gcc -c -I. file.c

The second approach is to isolate the tracepoint provider code into a separate object file by using a dedicated C source file to create probes:

#define TRACEPOINT_CREATE_PROBES #include "tp.h"

TRACEPOINT_DEFINE must be defined by a translation unit of the

application. Since we’re talking about static linking here, it could as

well be defined directly in the file above, before #include "tp.h":

#define TRACEPOINT_CREATE_PROBES #define TRACEPOINT_DEFINE #include "tp.h"

This is actually what lttng-gen-tp does, and is

the recommended practice.

Build the tracepoint provider:

gcc -c -I. tp.c

Finally, the resulting object file may be archived to create a more portable tracepoint provider static library:

ar rc tp.a tp.o